Lesson 13: Different Approach: Passing ALL Database Data

As a bonus mention, we wanted to share a different approach for smaller databases: passing all data to GPT instead of forming queries.

How Does It Work?

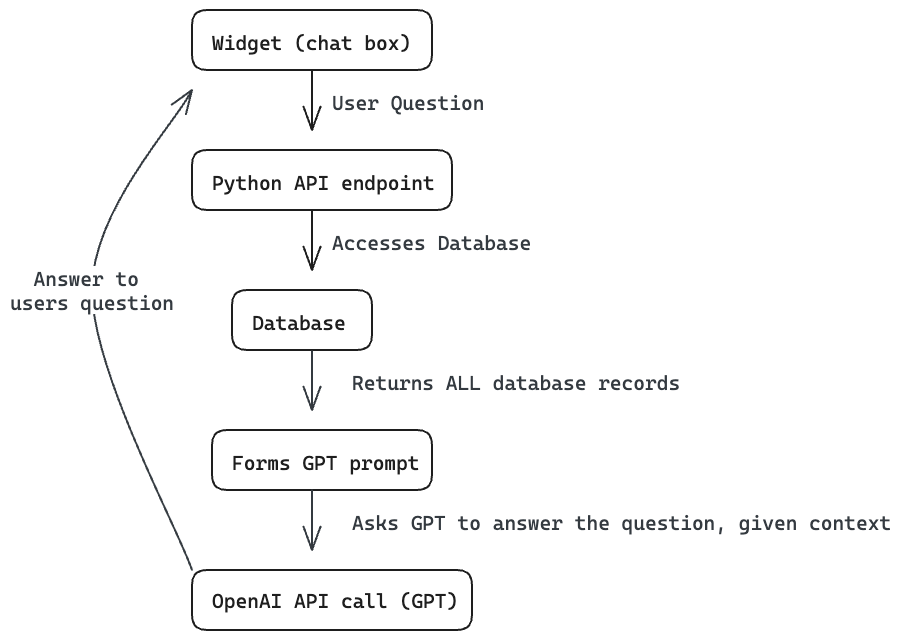

The idea here is to load all the information from the database at once and form a new GPT prompt. This way, we don't make multiple calls to the GPT engine, which makes this method faster (in theory!) and cheaper. Here's the overview:

As you can see, this workflow is similar to the previous one but less complex. Main aspects that change (besides multiple calls):

- We don't have to ask GPT to generate a query - such generator also has a big chance of failure.

- GPT works with all the data at once - this means that it might get different results

- There is one GPT prompt - improving the speed of the chatbot (each GPT call can take a few seconds)

- Simplified queries - we don't rely on GPT queries. We are just selecting the data manually

That said, let's build it and see how it works.

Building the Chatbot

Let's actually build it. To do that, we will follow the same steps as in the previous example, but with some differences:

- We will modify the

chatbot.pyfile - We will create a new

database.pyfile - We will remove the

ai_engine.pyfile (as we won't use it)

Let's start with the chatbot.py file. We will modify it to look like this:

src/chatbot.py

import databaseimport chat_historyfrom openai import OpenAI def make_ai_request(question, identifier): messages_list = [] prompt = database.get_prompt_template_from_database() messages_list.append({"role": "system", "content": prompt}) # Find the chat log in SQlite database history = chat_history.retrieve_history(identifier) # If the chat log is more than 1 message, add the chat history to the prompt if len(history) > 1: messages_list.append({"role": "system", "content": "Chat history:" + '\n'.join([f'{message[1]}: {message[2]}\n' for message in history])}) # Add the chat history to the prompt messages_list.append({"role": "user", "content": question}) chat_history.write_history(identifier, "user", question) client = OpenAI() response = client.chat.completions.create( model="gpt-3.5-turbo", messages=messages_list, ) ai_response = response.choices[0].message.content.strip() chat_history.write_history(identifier, "assistant", ai_response) return ai_responseAs you might notice, it looks similar to the previous example but with a few differences:

- We are forming a prompt with

database.get_prompt_template_from_database(). - We are calling ChatGPT with

client.chat.completions.createand passing the prompt as amessagesparameter along with the history

And that's it for this part. But wait, how are we forming the prompt? Well, we have to create the database.py file and add the following code:

src/database.py

import osimport mysql.connector def transform_data(cars, car_addons, addon_categories): """ Transforms the database data into a query for the GPT prompt :param list cars: :param list car_addons: :param list addon_categories: :return: """ car_data = {} categories = {} for category in addon_categories: categories[category[0]] = { 'name': category[1] } for car in cars: car_data[car[0]] = { 'id': car[0], 'name': car[1], 'model': car[2], 'year': car[3], 'type': car[4], 'drivetrain': car[5], 'addons': {} } for category in addon_categories: car_data[car[0]]['addons'][category[1]] = [] for addon in car_addons: car_data[addon[1]]['addons'][categories[addon[2]]['name']].append({ 'name': addon[3], }) car_stock_string = "" for car in car_data: if car_stock_string != "": car_stock_string += "---\n" car_stock_string += f"{car_data[car]['name']} {car_data[car]['model']} from {car_data[car]['year']}.\n" car_stock_string += f"It is a {car_data[car]['type']}.\n" car_stock_string += f"It is driven by {car_data[car]['drivetrain']}\n" car_stock_string += f"It has the following addons:\n" for category in car_data[car]['addons']: car_stock_string += f"{category}:\n" for addon in car_data[car]['addons'][category]: car_stock_string += f"- {addon['name']}\n" prompt = f"""You are working in a car dealership. Information about all the cars in our stock is provided in the Context section of the question. Your main job is to help a customer end up with a car model that would fit his needs. You should ask for more information about what type of car the person wants, but you should not exceed the information given in the context. Do not reply with any information that is not from our context. If you don't know what the customer meant - ask for more details, but never trust any other source than our Context. Addons are "perks" on the car, not modifications. These come as standard from factory Keep answers short and direct, make them seem like a salesman --- Context: {car_stock_string}---""" if not os.path.exists('../storage'): os.mkdir('../storage') with open('../storage/prompt.txt', 'w') as file: file.write(prompt) file.close() def load_database(): connector = mysql.connector.connect( host=os.getenv('DB_HOST'), user=os.getenv('DB_USER'), password=os.getenv('DB_PASSWORD'), database=os.getenv('DB_DATABASE') ) cars_query = connector.cursor() cars_query.execute("SELECT * FROM cars") cars = cars_query.fetchall() addons_query = connector.cursor() addons_query.execute("SELECT * FROM car_addons") car_addons = addons_query.fetchall() addon_categories_query = connector.cursor() addon_categories_query.execute("SELECT * FROM addon_categories") addon_categories = addon_categories_query.fetchall() connector.close() transform_data(cars, car_addons, addon_categories) def get_prompt_template_from_database(): """ Get the dataset from the database file """ # Check if dataset file exists, if not, create it if not os.path.exists('../storage/prompt.txt'): load_database() with open('../storage/prompt.txt', 'r') as file: return file.read()Let's look at what's happening here:

- We are connecting to the database and fetching all the data from the

cars,car_addons, andaddon_categoriestables - We are transforming the data into a string that will be used as a prompt for GPT

- We are saving the prompt to a file and returning it - this saves us from having to transform the data every time we want to use it

And if we take a look at our prompt.txt file, we can see that it looks like this:

You are working in a car dealership. Information about all the cars in our stock is provided in the Context section of the question. Your main job is to help a customer end up with a car model that would fit his needs. You should ask for more information about what type of car the person wants, but you should not exceed the information given in the context. Do not reply with any information that is not from our context. If you don't know what the customer meant - ask for more details, but never trust any other source than our Context. Addons are "perks" on the car, not modifications. These come as standard from factory Keep answers short and direct, make them seem like a salesman --- Context: BMW M3 from 2018.It is a sedan.It is driven by RWDIt has the following addons:Interior:- M Performance ExhaustExterior:- M Performance Carbon Fiber Rear Spoiler- M Performance Carbon Fiber Front Splitters---BMW M4 from 2018.It is a coupe.It is driven by AWDIt has the following addons:Interior:- M Performance ExhaustExterior:- M Performance Carbon Fiber Rear Spoiler- M Performance Carbon Fiber Front Splitters---Audi A1 from 2015.It is a coupe.It is driven by FWDIt has the following addons:Interior:- Infotainment SystemExterior:- Audi Sport Carbon Fiber Rear Spoiler- Audi Sport Carbon Fiber Front Splitters---Volkswagen Polo from 2010.It is a hatchback.It is driven by FWDIt has the following addons:Interior:- Leather Seats- Heated SeatsExterior:- LED Headlights- Tow bar---Volkswagen Golf from 2015.It is a hatchback.It is driven by FWDIt has the following addons:Interior:- Leather Seats- Heated SeatsExterior:- LED Headlights- Tow bar---Volkswagen Golf from 2015.It is a hatchback.It is driven by FWDIt has the following addons:Interior:- Alkantara Seats- Heated/Cooled SeatsExterior:- LED Headlights- Sport Exhaust---Volkswagen Passat from 2020.It is a hatchback.It is driven by FWDIt has the following addons:Interior:- Leather Seats- Heated SeatsExterior:- LED Headlights- Tow bar---Volvo S90 from 2023.It is a sedan.It is driven by AWDIt has the following addons:Interior:- Leather Seats- Heated/Cooled SeatsExterior:- LED Headlights- Roof Rack---Mercedes C63 AMG from 2023.It is a sedan.It is driven by RWDIt has the following addons:Interior:- Alkantara Seats- Heated/Cooled Seats- Carbon Fiber Steering WheelExterior:- Laser Headlights- Side Skirts---Fiat 500 from 2013.It is a hatchback.It is driven by FWDIt has the following addons:Interior:- Leather Seats- Automated GearboxExterior:- LED Headlights- Tow bar ---We have passed all the information to chatGPT directly; all it has to do is figure out what the user wants and provide the best possible answer.

Conclusion

Theoretically, this example uses one less GPT call and could cost less. But simultaneously, it requires more data to be passed to GPT and might increase the cost and response time. It's a trade-off you must consider when building your chatbot, but both methods are valid with pros and cons.

You can find the full code of this course in GitHub repository.

- Intro: Structure and Preparation

- AI Engine and Main Python Script

- Front-end JavaScript Widget

- Bonus

No comments or questions yet...