Lesson 5: AI Engine: Query OpenAI API

We can now query the OpenAI API with our prompt, so we will add the query_open_ai() function. This function will send the prompt to the OpenAI API and return the response to us.

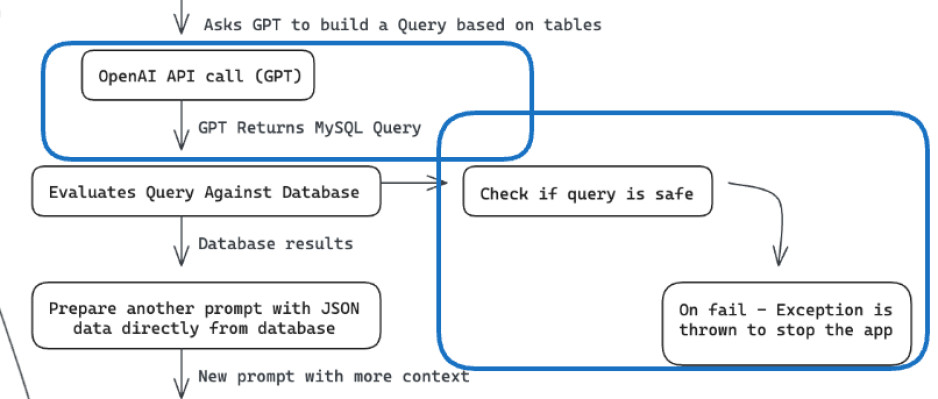

This function belongs to the following part of the application:

ai_engine.py

```python
from openai import OpenAI

# ...

def query_open_ai(prompt, temperature=0.7):
    client = OpenAI()
    completions = client.chat.completions.create(
        model="gpt-4-1106-preview",
        messages=[
            {
                "role": "system",
                "content": prompt
            }
        ],
        temperature=temperature,
        max_tokens=100,
    )
    return completions.choices[0].message.content

# ...
```

In this case, we told the OpenAI API to use the gpt-4-1106-preview model and sent it the prompt we created. We also set the temperature to 0.7, which is a way to control the randomness of the response. The lower the temperature, the more predictable the response will be.
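To build some intuition for what the temperature setting does, here is a minimal, self-contained sketch of temperature-scaled softmax, the standard technique for turning a model's raw token scores into sampling probabilities. This is a conceptual illustration only: the function name `softmax_with_temperature` and the example scores are hypothetical, and the code does not call the OpenAI API or claim to mirror its internals.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Dividing by the temperature sharpens (T < 1) or flattens (T > 1) the scores
    scaled = [score / temperature for score in logits]
    # Subtract the maximum before exponentiating for numerical stability
    largest = max(scaled)
    exps = [math.exp(score - largest) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical scores for three candidate next tokens
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # top token dominates
high = softmax_with_temperature(logits, 1.5)  # probabilities are more even

print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At a low temperature almost all of the probability lands on the highest-scoring token, so repeated calls tend to give the same answer. At a higher temperature the alternatives get a larger share, so responses vary more.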

Making Sure Queries Are Safe to Run on the Database

Finally, we need to add a way to check if the query is safe to execute. We don't want to execute DROP TABLE or DELETE queries, so we will add the query_safety_check function:

```python
# ...

def query_safety_check(query):
    banned_actions = ['insert', 'update', 'delete', 'alter', 'drop', 'truncate', 'create', 'replace']
    # Lowercase the query first so that "DROP TABLE" also matches 'drop'
    normalized = query.lower()
    if any(action in normalized for action in banned_actions):
        raise Exception("Query is not safe")

# ...
```

Now we are ready to test our AI engine!
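One caveat with the check above: it matches substrings, so a harmless query that selects a column such as `created_at` or `updated_at` would be rejected because those names contain "create" and "update". A possible refinement, sketched below, is to match whole words only and ignore case. The names `BANNED_ACTIONS`, `BANNED_PATTERN`, and `query_safety_check_strict` are hypothetical and not part of the lesson's code.

```python
import re

BANNED_ACTIONS = ("insert", "update", "delete", "alter",
                  "drop", "truncate", "create", "replace")

# \b marks a word boundary, so "created_at" does not match "create";
# re.IGNORECASE makes "DROP" and "drop" equivalent.
BANNED_PATTERN = re.compile(
    r"\b(" + "|".join(BANNED_ACTIONS) + r")\b",
    re.IGNORECASE,
)

def query_safety_check_strict(query):
    """Raise ValueError if the query contains a banned SQL keyword."""
    match = BANNED_PATTERN.search(query)
    if match:
        keyword = match.group(1).lower()
        raise ValueError(f"Query is not safe: found '{keyword}'")

# Passes silently: the column names only contain banned words as substrings
query_safety_check_strict("SELECT created_at, updated_at FROM users")

# Raises ValueError: DROP appears as a standalone keyword
try:
    query_safety_check_strict("DROP TABLE users")
except ValueError as error:
    print(error)
```

This is still only a keyword heuristic. It will, for example, reject a SELECT that uses the `REPLACE()` string function. Connecting to the database with a read-only user is a stronger safeguard and can be combined with a check like this one.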
