Lesson 1: 01 - Intro: Tools and Plan of Action

The most typical uses of AI these days are chatbots, personal assistants, and customer support agents. In this tutorial, let's build a chatbot that answers customers' questions based on the answers in our FAQ document.

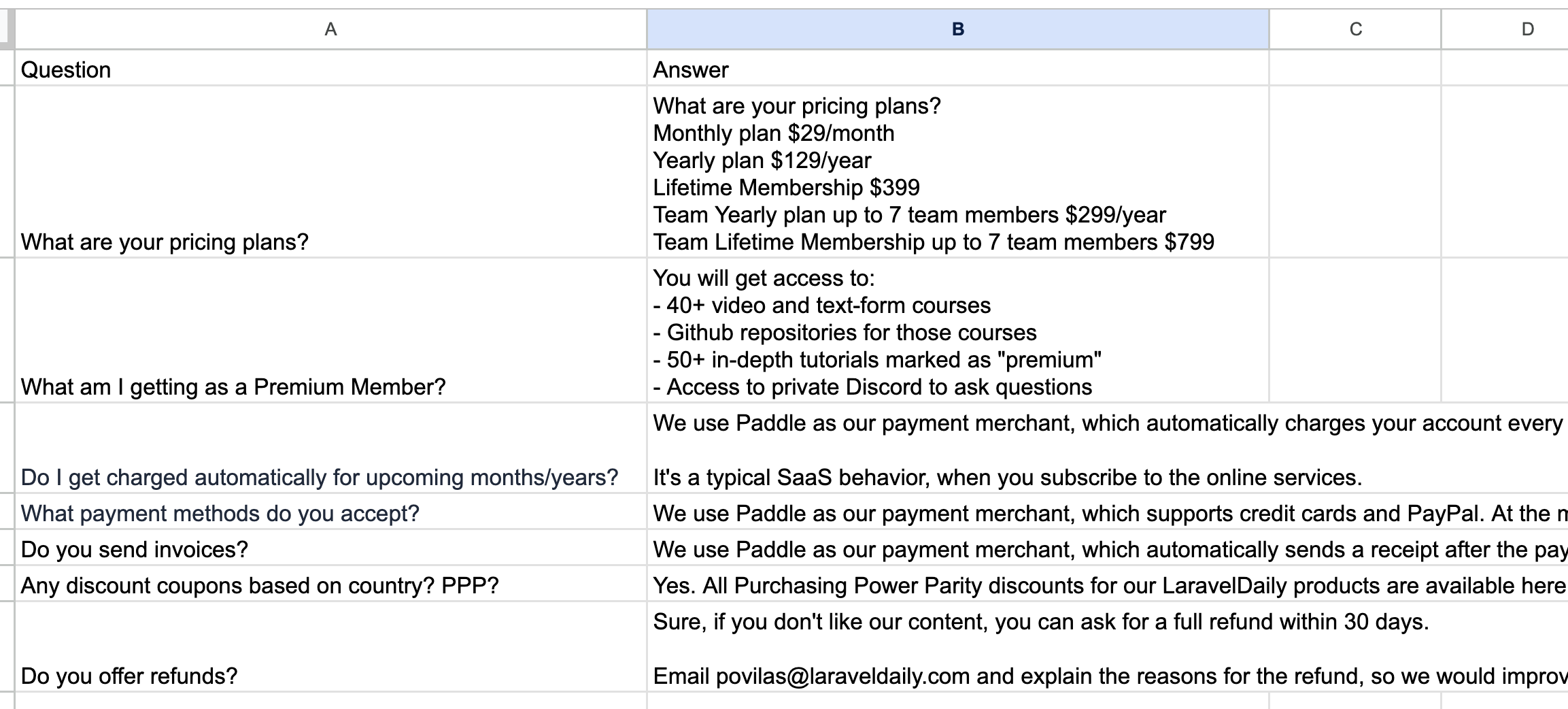

Our "knowledge base" FAQ will be a CSV file with two columns - question and answer.

You can download this initial CSV here.

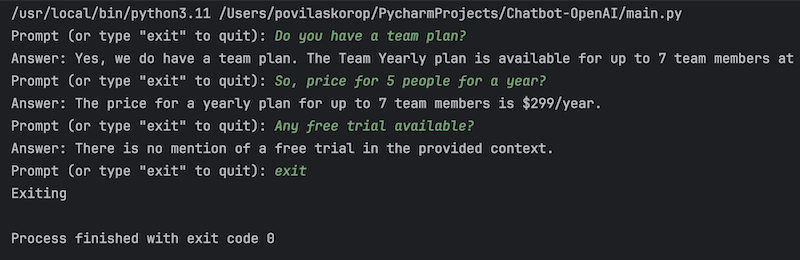
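To make the two-column format concrete, here is a minimal sketch that reads a question/answer CSV with Python's standard `csv` module. The sample rows and the `load_faq` function name are hypothetical illustrations, not the contents of the downloadable file, and the tutorial's own loading code may differ.

```python
import csv
import io

# Hypothetical sample rows; the real FAQ file uses the same two columns
# (question, answer) but its contents will differ.
SAMPLE_CSV = """question,answer
How do I reset my password?,"Click ""Forgot password"" on the login page."
Do you ship internationally?,Yes. Delivery times vary by country.
"""


def load_faq(file_obj):
    """Read a question/answer CSV into a list of (question, answer) tuples."""
    reader = csv.DictReader(file_obj)  # uses the header row as dict keys
    return [(row["question"].strip(), row["answer"].strip()) for row in reader]


faq = load_faq(io.StringIO(SAMPLE_CSV))
for question, answer in faq:
    print(f"Q: {question}\nA: {answer}\n")
```

With a real file you would pass an open file object instead, e.g. `open("faq.csv", newline="", encoding="utf-8")`, where `faq.csv` is a placeholder name.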

Here is the result of the terminal-based chatbot we will build:

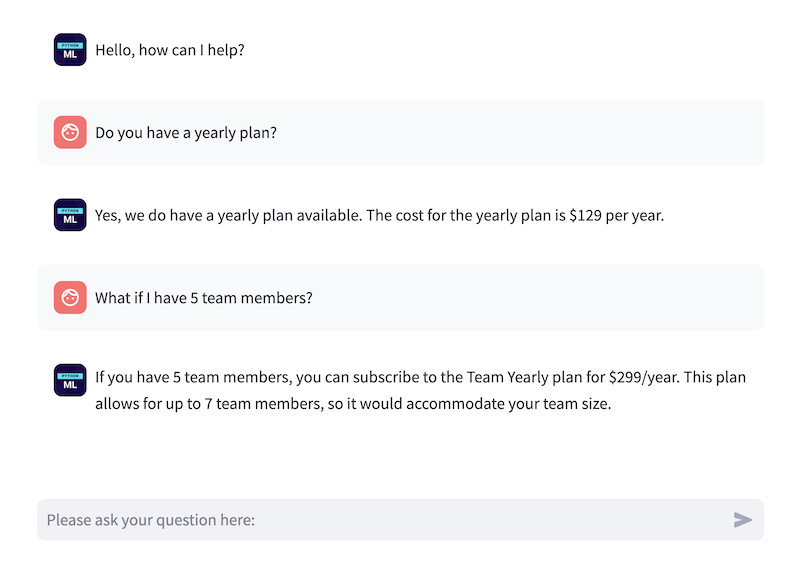

We will also build a web version:

Intrigued? Let's begin!

General Plan of Action

We will use two main tools:

- Large Language Model GPT-3.5 Turbo by OpenAI (yes, it's not free, and we will calculate the costs)

- And the LangChain framework to work with our data and the LLM with just a few lines of code
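Since GPT-3.5 Turbo is billed per token, with separate rates for input (prompt) and output (completion) tokens, the cost of a single request boils down to simple arithmetic. The sketch below only illustrates that calculation: `estimate_cost` is a hypothetical helper, and the prices in the example are placeholders, not OpenAI's actual rates, which you should look up on their pricing page.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_1k, output_price_per_1k):
    """Estimate the cost of one LLM call from its token counts.

    Prices are passed in explicitly because they change over time.
    """
    input_cost = prompt_tokens / 1000 * input_price_per_1k
    output_cost = completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


# Placeholder prices for illustration only, NOT real OpenAI pricing.
cost = estimate_cost(prompt_tokens=1200, completion_tokens=300,
                     input_price_per_1k=0.001, output_price_per_1k=0.002)
print(f"Estimated cost: ${cost:.6f}")
```

The token counts themselves can be measured with a tokenizer such as `tiktoken`, which is among the libraries installed below.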

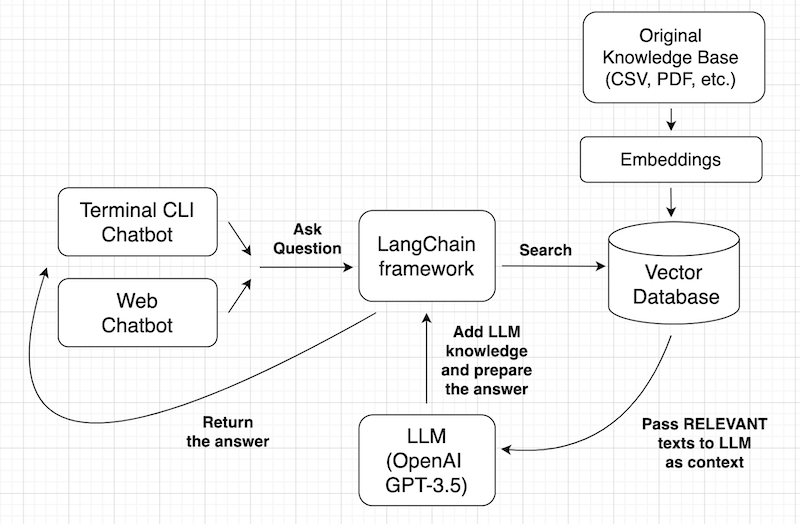

Our chatbot will consist of several essential components:

- Initial knowledge base (CSV, PDF, etc.)

- Vector database to store the knowledge base texts (transformed into embeddings)

- Large Language Model (LLM) that will formulate the answer

- LangChain framework as a "middle-man" to tie it all together: get the query, launch the search, pass the results to the LLM, and return the answer
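To show how the embedding and vector-search components fit together, here is a toy, dependency-free sketch. It is an assumption-laden stand-in, not what the tutorial uses: the "embeddings" are plain bag-of-words counts instead of a learned embedding model, and `ToyVectorStore` is a hypothetical in-memory class playing the role of a real vector database such as FAISS. The knowledge-base texts are made-up examples.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words term-count vector.
    Real systems use a learned embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []  # list of (vector, original text)

    def add(self, texts):
        for text in texts:
            self.items.append((embed(text), text))

    def search(self, query, k=1):
        """Return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]),
                        reverse=True)
        return [text for _, text in ranked[:k]]


# Hypothetical knowledge-base entries (question and answer joined into one text).
store = ToyVectorStore()
store.add([
    "How do I reset my password? Click Forgot password on the login page.",
    "Do you ship internationally? Yes, delivery times vary by country.",
])
print(store.search("I forgot my password", k=1))
```

The design point carries over to the real tools: texts are converted to vectors once, at indexing time, and each incoming query is converted the same way so it can be compared against them.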

Here's the overall "big picture" of how the process will work:

Don't worry if you don't understand it right away. It's just an overview. I will discuss each of those parts step-by-step.

In general, this approach is called RAG, which stands for "Retrieval-Augmented Generation". It means that we retrieve only the relevant information from our knowledge base and pass it as additional context to an LLM such as OpenAI GPT.
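The "augmented" part of RAG is essentially prompt construction: the retrieved snippets are placed into the prompt alongside the user's question. Here is a minimal sketch of that step; `build_rag_prompt` and its wording are hypothetical and are not the prompt template LangChain uses. In a real pipeline, `retrieved_docs` would come from the vector search, and the returned string would be sent to the LLM.

```python
def build_rag_prompt(question, retrieved_docs):
    """Combine retrieved knowledge-base snippets with the user's question.

    The model is instructed to answer only from the supplied context,
    which is what keeps its answers grounded in the FAQ.
    """
    context = "\n".join(f"- {doc}" for doc in retrieved_docs)
    return (
        "Answer the customer's question using only the context below.\n"
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieved snippet standing in for real search results.
docs = ["How do I reset my password? Click Forgot password on the login page."]
prompt = build_rag_prompt("I forgot my password, what now?", docs)
print(prompt)
```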

Install Libraries

In this tutorial, we will use a few external Python libraries, so you may want to install them upfront:

```
pip install tiktoken
pip install streamlit
pip install langchain
pip install langchain-community
pip install langchain-core
pip install langchain-openai
pip install langsmith
pip install faiss-cpu
```

I will discuss each of them in detail later.

In general, if you create a chatbot, you have so many tools to choose from!

- You can use various paid or free LLMs, not only GPT

- You may use various frameworks like LangChain or LlamaIndex

- You may choose from dozens of vector databases and embedding libraries

- You may or may not split the original documents into chunks

- etc.
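Splitting documents into chunks is one of the choices listed above. Here is a minimal sketch of the simplest approach, fixed-size character chunks with overlap; `split_into_chunks` is a hypothetical helper written for illustration. LangChain's text splitters (for example, `RecursiveCharacterTextSplitter`) expose similar `chunk_size` and `chunk_overlap` parameters but prefer to break on separators such as paragraphs and sentences.

```python
def split_into_chunks(text, chunk_size, overlap):
    """Split text into fixed-size character chunks that overlap.

    The overlap repeats a few characters between neighbouring chunks,
    so text cut at a chunk boundary keeps some surrounding context.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this chunk already reaches the end of the text
    return chunks


print(split_into_chunks("abcdefghij", chunk_size=4, overlap=1))
# → ['abcd', 'defg', 'ghij']
```

For a short FAQ like ours, each question/answer row is already small, so chunking matters more for long sources such as PDFs.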

I will briefly mention those alternatives in each chapter where applicable, but you should understand that in this tutorial, we will focus on only one set of tools.

The goal is to give you a general understanding of how chatbots work with LLMs and external data sources. With that knowledge, you could experiment with other tools that accomplish this task.

Now, let's start writing Python code in the next lesson.

The final code will be available in the repository: the link is at the end of the final lesson.
