When working with OpenAI and GPT models, it's crucial to keep track of the costs. In this tutorial, I will show you how to check the cost of API calls, both manually and programmatically, with Python and LangChain.

Just Check Usage Dashboard

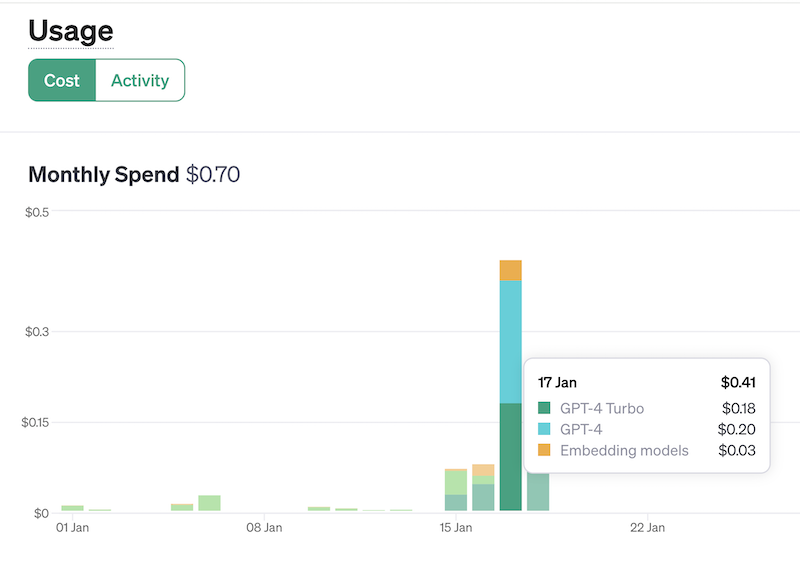

Of course, all API calls to OpenAI are logged on their system and shown on the dashboard if you log in:

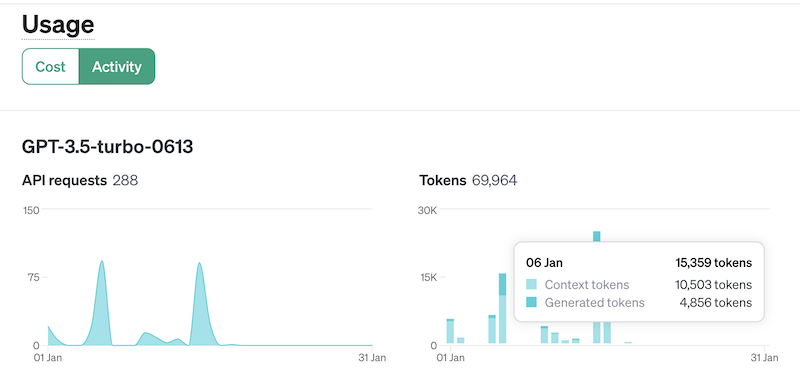

You can also conveniently view the money spent and token activity per model.

However, there are two problems with this approach:

- You get only the aggregated data. It's hard, if not impossible, to work out how much an individual API call costs.

- The data is not refreshed in real time. During my testing, I had to wait 10-20 minutes for the activity to appear on the dashboard.

If you don't care that much about real-time or individual call usage, this dashboard is your friend.

But if you want to dive a bit deeper...

Calculate API Request Cost with LangChain

I will show you the Python code based on my Chatbot GPT-3.5 course example.

With LangChain, calling the OpenAI API was just a matter of passing ChatOpenAI() as the LLM:

    # vectorstore and memory are defined earlier in the course code
    chain = ConversationalRetrievalChain.from_llm(
        llm=ChatOpenAI(),
        retriever=vectorstore.as_retriever(),
        memory=memory,
    )
    response = chain({"question": "Do you have a yearly plan?"})

To calculate the cost of that response, we can use a function called get_openai_callback():

    from langchain.callbacks import get_openai_callback

    with get_openai_callback() as cb:
        response = chain({"question": "Do you have a yearly plan?"})
        print(cb)

This is the result it would print:

    Tokens Used: 297
        Prompt Tokens: 247
        Completion Tokens: 50
    Successful Requests: 1
    Total Cost (USD): $0.0004705

In this case, I asked a simple question but provided the prompt with the context from my CSV document, which ended up as 247 tokens.

The GPT-3.5-Turbo model's text response was 50 tokens long.
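Printing cb is fine for a quick look, but the same numbers are also available as attributes on the callback object (prompt_tokens, completion_tokens, total_tokens, successful_requests, total_cost), which is handy if you want to log the cost of each call. Here is a minimal sketch of that idea. The usage_record() helper and the label argument are my own illustration, not part of LangChain, and a stand-in object with the values from the output above replaces the real cb so the snippet runs without an API key:

```python
from types import SimpleNamespace


def usage_record(cb, label):
    """Copy the counters from a callback object into a plain dict.

    `cb` is expected to expose the same attributes as the object returned
    by get_openai_callback(); `label` is a hypothetical free-text tag.
    """
    return {
        "label": label,
        "prompt_tokens": cb.prompt_tokens,
        "completion_tokens": cb.completion_tokens,
        "total_tokens": cb.total_tokens,
        "total_cost_usd": cb.total_cost,
    }


# Stand-in for the real callback object, filled with the numbers printed above.
# In real code you would pass the `cb` from `with get_openai_callback() as cb:`.
fake_cb = SimpleNamespace(
    prompt_tokens=247,
    completion_tokens=50,
    total_tokens=297,
    successful_requests=1,
    total_cost=0.0004705,
)

record = usage_record(fake_cb, "yearly plan question")
print(record)
```

Collecting these dicts in a list (or writing them to a CSV file) gives you the per-call breakdown that the dashboard does not show.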

To be perfectly honest, I'm not sure how that $0.0004705 is calculated by LangChain because the official pricing formula for the model gpt-3.5-turbo-1106 that we used, as of January 2024, is this:

- Input: $0.0010 / 1K tokens

- Output: $0.0020 / 1K tokens

So, my calculation would be: 0.247 x $0.001 + 0.050 x $0.002 = $0.000347. A bit lower than the $0.0004705 provided by LangChain.
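The same arithmetic can be written as a small helper, so you can plug in other token counts or prices. This is just a sketch: the function name estimate_cost() and its parameters are mine, and the prices are the January 2024 ones listed above, so check the Pricing page for current values.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_1k, output_price_per_1k):
    """Return the estimated cost in USD for one API call.

    Prices are given per 1,000 tokens, as on the OpenAI Pricing page.
    """
    input_cost = prompt_tokens / 1000 * input_price_per_1k
    output_cost = completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost


# Token counts from the callback output, prices for gpt-3.5-turbo-1106
cost = estimate_cost(247, 50, input_price_per_1k=0.0010, output_price_per_1k=0.0020)
print(f"${cost:.6f}")  # $0.000347
```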

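My first guess was that get_openai_callback() also adds the cost of the embeddings I used in the same Python script, but I haven't found any way to check it. A simpler explanation fits the number exactly, though: with an input price of $0.0015 / 1K tokens, which was the rate for the earlier gpt-3.5-turbo model version, we get 0.247 x $0.0015 + 0.050 x $0.002 = $0.0004705. So the callback was most likely applying that rate rather than the gpt-3.5-turbo-1106 one.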

But still, you get the idea about the rough cost: for the GPT-3.5-Turbo model, one chatbot request may cost a fraction of a cent. Of course, it depends mainly on the size of the context you pass with the prompt.

Wait, What is a Token, Exactly?

Ok, so we have 247 tokens, 50 tokens, and a price for 1K tokens: what does that actually mean?

Quote from the OpenAI Pricing page:

"Multiple models, each with different capabilities and price points. Prices are per 1,000 tokens. You can think of tokens as pieces of words, where 1,000 tokens is about 750 words."

This rule of thumb is helpful, but only to an extent: it gives an approximate token count, and therefore only a rough cost. What if you want to calculate the exact token count upfront?
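Before reaching for an exact tokenizer, the "1,000 tokens is about 750 words" rule from the quote can be turned into a quick estimator. This is only a rough sketch: the approx_tokens() name is mine, words are simply split on whitespace, and the result is rounded up.

```python
def approx_tokens(text):
    """Estimate the token count from the word count.

    Uses the rule of thumb that 1,000 tokens is about 750 words,
    rounding up to the next whole token.
    """
    words = len(text.split())
    # Integer ceiling of words * 1000 / 750
    return (words * 1000 + 749) // 750


query = "Do you have a yearly plan?"
print(f"Approximate tokens: {approx_tokens(query)}")  # Approximate tokens: 8
```

It's good enough for a ballpark figure, but for an exact count you need a real tokenizer.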

There's a library called tiktoken which can help here. We can install it by running pip install tiktoken.

Here's the code:

    import tiktoken

    query = "Do you have a yearly plan?"
    encoding = tiktoken.encoding_for_model('gpt-4')
    print(f"Tokens: {len(encoding.encode(query))}")

Output:

    Tokens: 7

With that in mind, you can calculate the token amount at any time for any string before doing the OpenAI API call and without using LangChain.

From there, you can roughly estimate the cost of input based on the token price on the Pricing page.

The same goes for the cost of OpenAI Embeddings if you use them. Count the tokens in all the documents you will embed, divide by 1,000, and multiply by the price of $0.0001 / 1K tokens (as of January 2024).
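As a sketch of that calculation, the helper below sums the tokens over a list of documents and applies the price per 1K tokens. The function names and the two sample documents are made up for illustration. To keep the snippet runnable without extra packages, it uses a crude whitespace word counter as a stand-in. For real numbers, pass a tiktoken-based counter instead, for example one built on encoding.encode() as shown earlier.

```python
def estimate_embedding_cost(documents, count_tokens, price_per_1k=0.0001):
    """Return (total_tokens, cost_in_usd) for embedding all documents.

    `count_tokens` is any function that maps a string to a token count.
    The default price is the January 2024 value quoted in this article.
    """
    total_tokens = sum(count_tokens(doc) for doc in documents)
    return total_tokens, total_tokens / 1000 * price_per_1k


def whitespace_counter(text):
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


# Made-up sample documents, only for demonstration
docs = [
    "Do you have a yearly plan?",
    "Yes, we offer monthly and yearly plans.",
]

tokens, cost = estimate_embedding_cost(docs, whitespace_counter)
print(f"Tokens: {tokens}, estimated cost: ${cost:.7f}")
```

Passing the counter as an argument keeps the cost logic separate from the tokenizer, so you can swap in an exact counter without touching the rest.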
