I recently saw a few jobs on Upwork that involve splitting large CSV files into smaller ones. So, I decided to write this tutorial and show how to do it in Python.

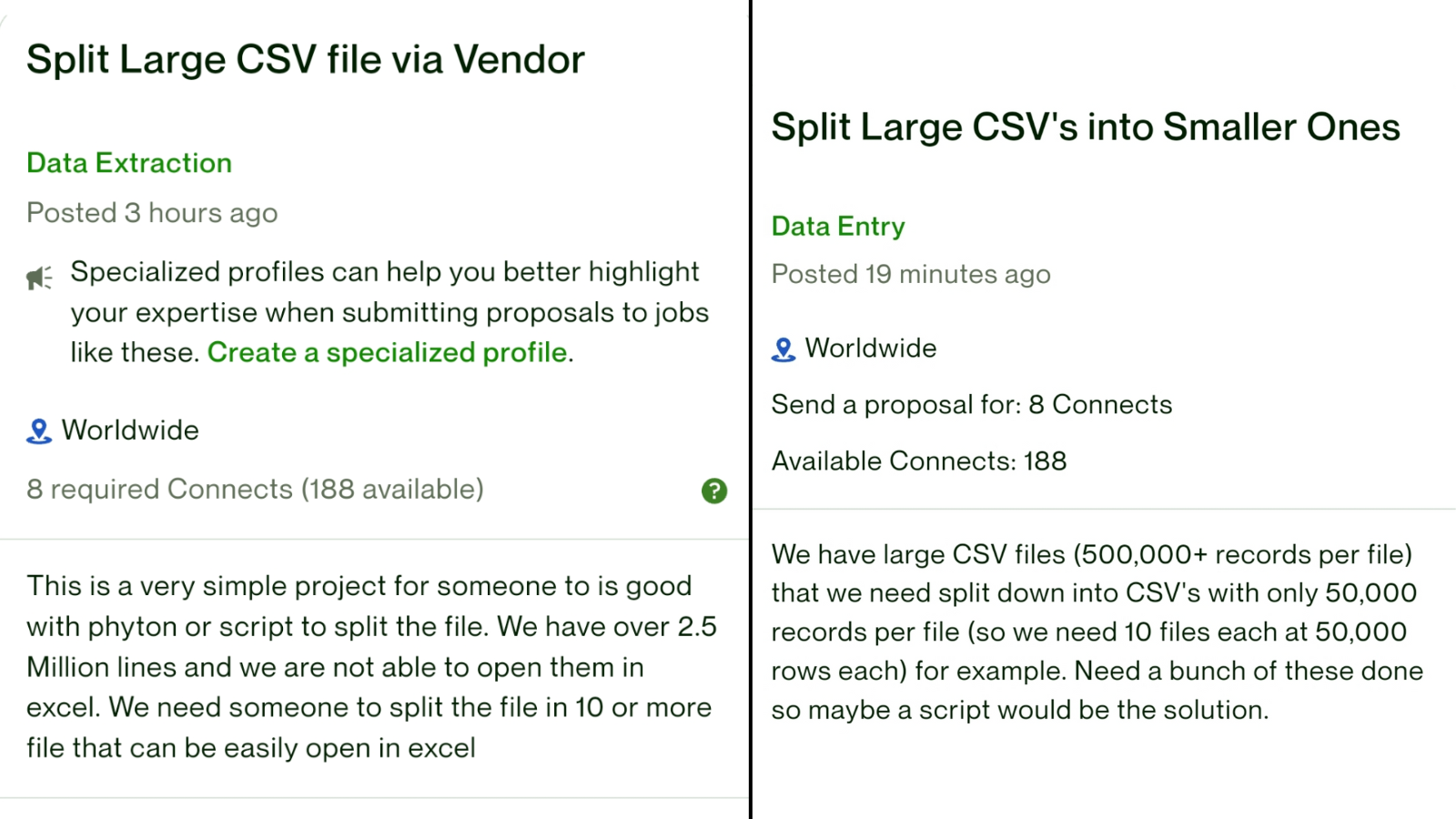

Here are the real jobs that you would get paid for:

Now, let's perform the task.

Imagine you have a CSV file with 500,000 rows. To split it, we define two functions:

- The function of reading the rows and building the "current" list until its size is 50,000

- The function to write that current list into a new CSV

import csv def write_csv(filename, data, header=None): with open(filename, 'w') as file: writer = csv.writer(file) if header: writer.writerow(header) writer.writerows(data) def split_csv(filename, num_rows, has_header=True): name, extension = filename.split('.') file_no = 1 chunk = [] row_count = 0 header = '' with open(filename, 'r') as file: reader = csv.reader(file) for row in reader: if has_header: header = row has_header = False continue chunk.append(row) row_count += 1 if row_count >= num_rows: write_csv(f'{name}-{file_no}.{extension}', chunk, header) chunk = [] file_no += 1 row_count = 0 if chunk: write_csv(f'{name}-{file_no}.{extension}', chunk, header)As you can see, we also have a few helper variables:

-

name- to build the salaries-1.csv, salaries-2.csv, etc. -

file_no- to build the salaries-1.csv, salaries-2.csv, etc -

header- to write that in each of the resulted files, on top -

chunk- the current chunk which is filled in until reading thenum_rowssize -

row_count- iteration variable to compare against thenum_rows

Now, we can call that main function with two parameters - the original filename and how many rows should be in each of the resulting files:

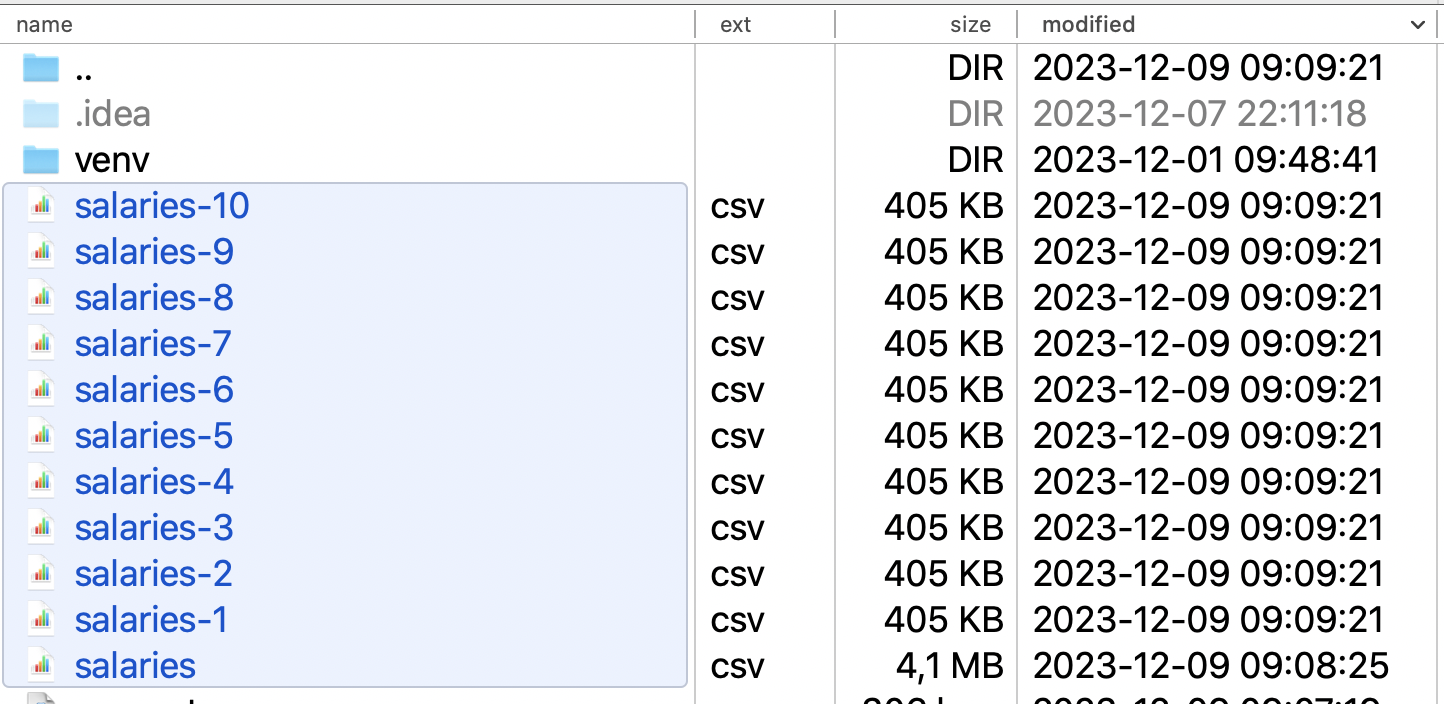

split_csv('salaries.csv', 50000)In this case, we have split a file with 500,000 rows into 10 files with 50,000 rows each:

No comments or questions yet...